Author: Eldar Sido, Product Marketing Specialist, Renesas Electronics

Neural networks (NNs) are inspired by the brain, but the use of neuroscience terminology (neurons and synapses) to explain them has always been a source of complaint for neuroscientists, because the current generation of neural networks works very differently from the brain. Despite the inspiration, the general structure, neural computations, and learning techniques of the current second generation of neural networks differ greatly from those of the brain. This comparison so bothered neuroscientists that they began work on a third generation of networks that more closely resemble the brain, called spiking neural networks (SNNs), along with hardware capable of running them, namely the neuromorphic architecture.

Spiking Neural Networks

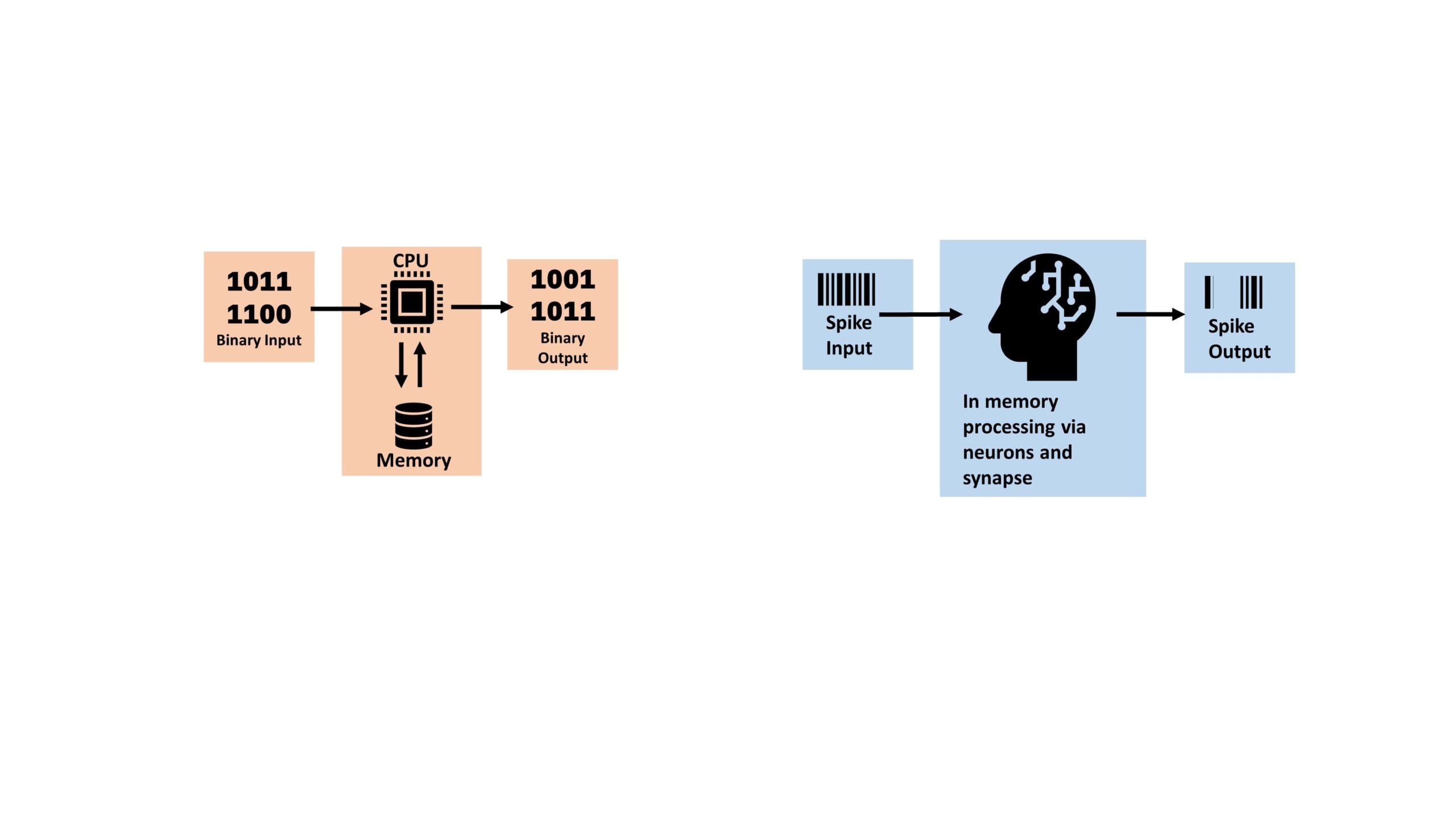

SNNs are a type of artificial neural network (ANN) that is more closely inspired by the brain than its second-generation counterparts, with one key difference: SNNs are spatiotemporal NNs, that is, they take time into account in their operation. SNNs operate on discrete spikes determined by a differential equation that represents various biological processes. The most critical of these is the firing of a neuron once its membrane potential reaches the firing threshold, which happens when spikes arrive at that neuron in specific time sequences. Analogously, the brain consists of about 86 billion computational units called neurons, which receive input from other neurons via dendrites. Once the inputs exceed a certain threshold, the neuron fires and sends an electrical pulse through a synapse, and the synaptic weight controls the strength of the pulse sent to the next neuron. Unlike in other artificial neural networks, where information propagates from layer to layer as dictated by the system clock, SNN neurons in different layers fire asynchronously and their spikes arrive at different times. The spatiotemporal property of SNNs, together with the discontinuous nature of the spikes, means that models can be more sparsely connected, with neurons linking only to relevant neurons, and can use time as a variable, allowing information to be encoded more densely than with the traditional binary encoding of ANNs. This makes SNNs more computationally powerful and more efficient.
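As a rough illustration of the threshold behavior described above, the following minimal Python sketch simulates a leaky integrate-and-fire (LIF) neuron, one common simplified spiking neuron model. The choice of model, the function name `simulate_lif`, and all parameter values are assumptions made for illustration; they are not taken from any specific neuromorphic device.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Euler-integrate dV/dt = (-(V - v_rest) + I) / tau.

    Returns the list of time-step indices at which the neuron fired.
    All parameters are illustrative, unitless values.
    """
    v = v_rest
    spike_steps = []
    for step, current in enumerate(input_current):
        # The membrane potential leaks toward v_rest and is driven by the input.
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_threshold:
            # Threshold reached: emit a spike and reset the potential.
            spike_steps.append(step)
            v = v_reset
    return spike_steps


# A weak input never drives the potential to the threshold: no spikes.
weak_spikes = simulate_lif([0.5] * 100)

# A stronger input makes the neuron fire at regular intervals.
strong_spikes = simulate_lif([2.0] * 100)

print("weak input spikes:  ", weak_spikes)
print("strong input spikes:", strong_spikes)
```

In this sketch the output is a list of spike times rather than a continuous activation value, which is what makes time part of the computation.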

The asynchronous behavior of SNNs, together with the need to solve differential equations, is computationally demanding on traditional hardware, so a new architecture had to be developed. This is where neuromorphic architecture comes in.

Neuromorphic Architecture

Neuromorphic architecture is a non-von Neumann architecture inspired by the brain and made up of neurons and synapses. In neuromorphic computers, data processing and storage take place in the same region, alleviating the von Neumann bottleneck, which limits the maximum performance of traditional architectures because data must be moved between memory and the processing units at relatively slow speeds. Furthermore, the neuromorphic architecture natively supports SNNs and accepts spikes as inputs, allowing information to be encoded in spike arrival time, magnitude, and shape. Key features of neuromorphic devices therefore include inherent scalability, event-based computation, and stochasticity, since neuron firing can have an element of randomness. Neuromorphic architecture is also attractive for its ultra-low-power operation, generally consuming orders of magnitude less power than traditional computer systems.
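To make the idea of encoding information in spike arrival time more concrete, here is a small Python sketch of latency (time-to-first-spike) coding, in which a stronger input produces an earlier spike and a zero input produces no event at all. This is one possible encoding scheme chosen for illustration; the function name `latency_encode` and the time window `t_max` are hypothetical, and the sketch does not describe how any particular neuromorphic device encodes its inputs.

```python
def latency_encode(intensities, t_max=100.0):
    """Map each intensity in [0, 1] to a spike arrival time.

    Stronger inputs spike earlier. Zero (or negative) inputs emit no
    spike, represented here as None. t_max is an assumed time window.
    """
    spike_times = []
    for x in intensities:
        if x <= 0.0:
            spike_times.append(None)  # no event is generated
        else:
            clipped = min(x, 1.0)
            spike_times.append(t_max * (1.0 - clipped))
    return spike_times


spike_times = latency_encode([0.0, 0.25, 1.0])
print(spike_times)  # [None, 75.0, 0.0]
```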

Neuromorphic Market Forecast

Technologically, neuromorphic devices have the potential to play an important role in the coming era of edge and endpoint artificial intelligence. To understand the expected industry demand, it is useful to look at research forecasts. According to a report by Sheer Analytics & Insights, the global neuromorphic computing market is expected to reach $780 million by 2028 at a CAGR of 50.3% [1]. Mordor Intelligence, on the other hand, expects the market to reach $366 million by 2026 at a CAGR of 47.4% [2], and much more market research indicating a similar increase can be found online. While the forecast numbers are not consistent with each other, one thing is: demand for neuromorphic devices is expected to increase dramatically in the coming years, and market research firms expect various industries, such as industrial, automotive, mobile, and medical, to adopt neuromorphic devices for a variety of applications.

Neuromorphic TinyML

Since TinyML (Tiny Machine Learning) is concerned with running ML and NNs on devices with strict memory and processor constraints, such as microcontrollers (MCUs), incorporating a neuromorphic core for TinyML use cases is a natural step, as it offers several distinct advantages.

Neuromorphic devices are event-based processors that operate on non-zero events. Event-based convolutions and dot products are significantly less computationally expensive, since zeros are not processed. Event-based convolution performance improves further as the number of zeros in the filter channels or kernels increases. This, along with activation functions such as ReLU being centered around zero, gives event-based processors an inherent activation sparsity, reducing the effective MAC requirements.
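The following Python sketch illustrates why skipping zeros reduces the number of multiply-accumulate (MAC) operations. It compares a dense dot product with an event-based one that only processes non-zero activations and non-zero weights. The function names and the example values are made up for illustration, and the sketch only counts operations in software; it does not model how a neuromorphic processor is actually implemented.

```python
def relu(x):
    """ReLU activation: negative inputs become exactly zero."""
    return x if x > 0 else 0.0


def dense_dot(activations, weights):
    """Conventional dot product: one MAC per element, zeros included."""
    total, macs = 0.0, 0
    for a, w in zip(activations, weights):
        total += a * w
        macs += 1
    return total, macs


def event_based_dot(activations, weights):
    """Dot product driven by non-zero events only."""
    # Only non-zero activations generate events.
    events = [(i, a) for i, a in enumerate(activations) if a != 0]
    total, macs = 0.0, 0
    for i, a in events:
        if weights[i] == 0:
            continue  # zeros in the kernel are skipped as well
        total += a * weights[i]
        macs += 1
    return total, macs


# Hypothetical pre-activation values; ReLU zeroes out the negative ones.
pre_activations = [-1.5, 0.5, -0.2, 2.0, -3.0, 0.0, 1.0, -0.7]
weights = [0.5, -1.0, 0.25, 0.0, 2.0, 1.0, 1.5, -0.5]
activations = [relu(x) for x in pre_activations]

dense_result, dense_macs = dense_dot(activations, weights)
event_result, event_macs = event_based_dot(activations, weights)

print("dense:      ", dense_result, "with", dense_macs, "MACs")
print("event-based:", event_result, "with", event_macs, "MACs")
```

Both functions return the same result, but the event-based version performs a MAC only where both the activation and the weight are non-zero.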

Neuromorphic processing also allows more restricted quantization, such as 1-, 2-, and 4-bit quantization, compared to the conventional 8-bit quantization used in ANNs. In addition, since SNNs are embedded in hardware, neuromorphic devices (such as BrainChip's Akida) have a unique On-Edge learning capability. This is not possible with conventional devices, as they only simulate a neural network on a von Neumann architecture, which makes On-Edge learning computationally expensive, with large memory overheads that are outside the budget of TinyML systems. Moreover, integers do not provide enough range to train an NN model accurately, so training with 8 bits is currently not feasible on traditional architectures. On traditional architectures, some edge learning implementations based on machine learning algorithms (auto-encoders, decision trees) have reached production for simple real-time analytics use cases, while NNs are still under investigation.
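As a back-of-the-envelope illustration of what lower bit widths mean for parameter memory, the Python sketch below computes the storage needed for a model's weights at 8, 4, 2, and 1 bits per parameter, and shows a simple symmetric uniform quantizer. The parameter count of 100,000, the example weights, and the quantization scheme itself are assumptions chosen for illustration; actual devices and toolchains may quantize differently.

```python
import math


def parameter_memory_bytes(num_parameters, bits):
    """Bytes needed to store num_parameters weights at the given bit width."""
    return math.ceil(num_parameters * bits / 8)


def quantize_uniform(weights, bits):
    """Symmetric uniform quantization to signed integer codes (bits >= 2).

    Returns the integer codes and the scale, so that each weight is
    approximated by code * scale.
    """
    if bits < 2:
        raise ValueError("this simple sketch needs at least 2 bits")
    q_max = 2 ** (bits - 1) - 1
    largest = max(abs(w) for w in weights)
    scale = largest / q_max if largest > 0 else 1.0
    codes = [max(-q_max, min(q_max, round(w / scale))) for w in weights]
    return codes, scale


# Hypothetical model size used only for this example.
num_parameters = 100_000
memory_by_bits = {bits: parameter_memory_bytes(num_parameters, bits)
                  for bits in (8, 4, 2, 1)}
print(memory_by_bits)

# Hypothetical weights quantized to 4 bits (codes in the range -7..7).
codes_4bit, scale_4bit = quantize_uniform([0.7, -0.28, 0.07, 0.0], bits=4)
print(codes_4bit, scale_4bit)
```

Halving the bit width halves the parameter memory, at the cost of a coarser approximation of each weight.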

In summary, the advantages of using neuromorphic devices and SNNs at the edge are:

- Ultra-low power consumption (millijoules to microjoules per inference)

- Lower MAC requirements compared to conventional NNs

- Lower parameter memory usage compared to conventional NNs

- On-Edge learning capabilities

Neuromorphic TinyML Use Cases

With all that said, microcontrollers with neuromorphic cores can excel in use cases across industries thanks to their distinctive edge-learning features, such as:

- In anomaly detection applications for existing industrial equipment, using the cloud to train a model is inefficient. Adding an endpoint AI device to the equipment and training at the edge allows for easy scalability, since aging tends to differ from machine to machine, even for machines of the same model.

- In robotics, the joints of robotic arms tend to wear out over time, become misaligned, and stop working as needed. Retuning the controller at the edge without human intervention mitigates the need to call a professional, reduces downtime, and saves time and money.

- In facial recognition applications, a user would typically have to add their face to the dataset and retrain the model in the cloud. With On-Edge learning, the neuromorphic device can identify the end user from just a few snapshots of their face, keeping the user's data secure on the device and providing a smoother experience. This can be used in cars, where different users have different preferences for seat position, climate control, and so on.

- In keyword detection applications, additional words can be added at the edge for the device to recognize. This can be used in biometric applications, where a person adds a "secret word" that they would like to keep secure on the device.

The balance between ultra-low power consumption and enhanced performance makes neuromorphic endpoint devices suitable for applications that must run on battery power for extended periods. They can run algorithms that are not possible on other low-power devices, which are computationally limited, and that high-end devices with similar processing capability can only run while consuming too much power. Use cases include:

- Smart watches that monitor and process data at the endpoint, sending only relevant information to the cloud.

- Smart camera sensors that detect people in order to execute a logical command, for example automatically opening doors when a person approaches, whereas current technology is based on proximity sensors.

- Areas without connectivity or charging capabilities, such as forests for intelligent animal tracking, or undersea pipelines monitored for possible cracks using real-time sound, vision, and vibration data.

- Infrastructure monitoring, where a neuromorphic MCU can continuously monitor motion, vibration, and structural changes in bridges (via imaging) to identify potential failures.

Conclusions

Renesas, as a leader in semiconductors, has recognized the great potential of neuromorphic devices and SNNs, so we have licensed a neuromorphic core from BrainChip [3], the world's first commercial producer of neuromorphic IP. As Sailesh Chittipeddi, our executive vice president, told EEnews Europe: “At the low end, we've added an ARM M33 MCU and a spiking neural network with BrainChip core licensed for select applications; we have licensed what we need to license from BrainChip, including the software to get the ball rolling.” [4]

Therefore, as we try to innovate and develop the best possible devices on the market, we are excited to see how this innovation will contribute to making our lives easier.