Mark Patrick, Mouser Electronics

Machine learning (ML) algorithms have quickly become a part of our lives. Machine learning is a type of artificial intelligence (AI), and it is more common than it seems. These algorithms are so ubiquitous that we often do not notice them: in mobile assistants, in traffic route planning, in the results of an Internet search, and so on. Complex algorithms, and groups of interconnected algorithms, are becoming more widespread in the industrial, medical and automotive sectors, so it is necessary to understand why an ML algorithm generates a certain result. Explainable AI (xAI) is the term increasingly used for techniques that describe the result of an algorithm and the underlying factors on which that result is based.

In this article we are going to explain what xAI is and why it is an essential element for any new machine learning application.

AI and machine learning in our daily lives

It's hard to pinpoint exactly when ML first became a part of our lives, as there was never a single announcement or launch. Rather, it has been introduced little by little and has become an intrinsic part of our daily interaction with technology. For many of us, the first experience was with a mobile assistant, such as Voice, from Google, or Siri, from Apple. ML quickly became an essential part of advanced driver assistance systems (ADAS), such as adaptive cruise control (ACC), lane keeping assist (LKA) and traffic sign identification. There are other applications where ML is also used, although we may not know it. For example, financial and insurance companies use ML for various document processing functions, and medical diagnostic systems use it to detect patterns in MRI and test results.

Machine learning has quickly become a part of our lives, and its ability to enable agile decision making means that we use it very frequently.

How an algorithm makes decisions

The fact that we rely so heavily on decisions made by ML systems has recently raised concerns from some consumer and professional ethics groups.

To understand what a machine learning system does to arrive at a result or a probability, let's briefly examine how it works.

Machine learning uses an algorithm to mimic the way the human brain makes decisions. The neurons of our brain have their equivalent in a mathematical model, the artificial neural network (ANN), on which the algorithm is built. Like our brain, an ANN can infer a result with a certain degree of probability based on the knowledge it has acquired, and it learns as it gains experience, much as a person does from birth. Training an ANN is therefore a fundamental part of any machine learning model. There are also different types of neural networks for different tasks. For example, a convolutional neural network (CNN) is well suited to image recognition, while a recurrent neural network (RNN) is well suited to speech processing.

The network acquires knowledge by processing a very large amount of training data. For a CNN, it takes tens of thousands of images of different animals, along with their names, to build an animal recognition application. Many images are needed for each species and genus, showing different characteristics and different degrees of ambient lighting. After training a model, the testing phase begins with images that the system has not yet processed. The model infers a result with a probability for each image, and this probability increases as more training data is introduced and the neural network is optimized.
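As a rough illustration of how a network's raw output becomes "a result with a certain degree of probability", the sketch below applies the softmax function, a common final step in classification networks. The class names and scores are invented for the example and do not come from any real model.

```python
import math

def softmax(scores):
    """Convert raw network output scores into probabilities that sum to 1."""
    # Subtract the largest score first so exp() cannot overflow.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for three animal classes (assumed values).
labels = ["cat", "dog", "horse"]
scores = [2.0, 1.0, 0.1]

probabilities = softmax(scores)
best = max(range(len(labels)), key=lambda i: probabilities[i])
print(labels[best], round(probabilities[best], 3))
```

The class with the highest probability is reported as the inferred result, and the probability itself indicates how confident the model is.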

When inference probabilities are high enough for the task, application developers can deploy the machine learning model.

A simple industrial edge application of machine learning is monitoring the condition of a motor through its vibration pattern. If we add a vibration sensor (piezoelectric, MEMS, or a digital microphone) to an industrial motor, we can record a detailed set of vibration characteristics. We can enrich the training data with recordings from retired motors that have known mechanical faults, such as worn bearings or drive issues. The resulting model can continuously monitor a motor and provide uninterrupted information on its status. Running this type of neural network on a low-power microcontroller is called TinyML.
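To make the condition-monitoring idea concrete, here is a minimal sketch that uses a simple statistical baseline instead of a neural network: it learns the typical RMS vibration level from healthy recordings and flags windows that deviate strongly from it. The synthetic sine-wave data, the function names and the threshold `k` are all assumptions made for illustration.

```python
import math
import statistics

def rms(samples):
    """Root-mean-square amplitude of one window of vibration samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def fit_baseline(healthy_windows):
    """Learn the mean and standard deviation of RMS from healthy recordings."""
    values = [rms(w) for w in healthy_windows]
    return statistics.mean(values), statistics.stdev(values)

def is_anomalous(window, baseline, k=3.0):
    """Flag a window whose RMS lies more than k standard deviations from the mean."""
    mean, std = baseline
    return abs(rms(window) - mean) > k * std

def sine_window(amplitude, n=200):
    """Synthetic stand-in for sensor data: five sine cycles of a given amplitude."""
    return [amplitude * math.sin(2 * math.pi * 5 * i / n) for i in range(n)]

# Healthy motor: amplitudes vary only slightly between recordings (assumed data).
healthy = [sine_window(a) for a in (1.00, 1.02, 0.98, 1.01, 0.99)]
baseline = fit_baseline(healthy)

print(is_anomalous(sine_window(1.0), baseline))  # typical vibration level
print(is_anomalous(sine_window(2.5), baseline))  # much stronger vibration
```

A trained neural network would replace the RMS threshold with a learned classifier, but the overall flow of recording, learning a reference and monitoring continuously stays the same.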

What is explainable artificial intelligence?

As we have already seen, the results obtained by some ML-based applications have raised concerns that they are not impartial. The debate over the alleged bias of ML and AI has several components, but the idea that ML results should be more transparent, fair, and ethically and morally sound is quite widespread. Most neural networks work like a black box: the box receives data and produces a result from it, but gives no indication of how the result was obtained. In short, it is increasingly necessary for an algorithm-based decision to explain the basis of its result, and that is where explainable artificial intelligence (xAI) comes in.
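One simple way to look inside a black box is perturbation-based attribution: replace one input at a time with a neutral value and measure how much the output changes. The sketch below is only an illustration of that idea; the `model` function, its weights and the feature names are hypothetical stand-ins for a trained network.

```python
def model(features):
    """Hypothetical stand-in for a trained black-box model returning a fault score."""
    weights = {"vibration_rms": 0.8, "temperature": 0.15, "load": 0.05}
    return sum(weights[name] * value for name, value in features.items())

def perturbation_importance(predict, features, baseline=0.0):
    """Score each input by the output change when it is replaced by a baseline value."""
    reference = predict(features)
    importance = {}
    for name in features:
        perturbed = dict(features)
        perturbed[name] = baseline  # neutralize a single input
        importance[name] = abs(reference - predict(perturbed))
    return importance

sample = {"vibration_rms": 1.0, "temperature": 1.0, "load": 1.0}
scores = perturbation_importance(model, sample)

# Inputs ordered from most to least influential for this sample.
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)
```

The ranking gives a first, coarse explanation of which inputs drove a particular result. It treats inputs independently, so it can miss interactions between them.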

In such a short article, we can only cover the fundamental concepts of xAI, but you will find the white papers from NXP, a semiconductor vendor, and PwC, a management consultancy, informative.

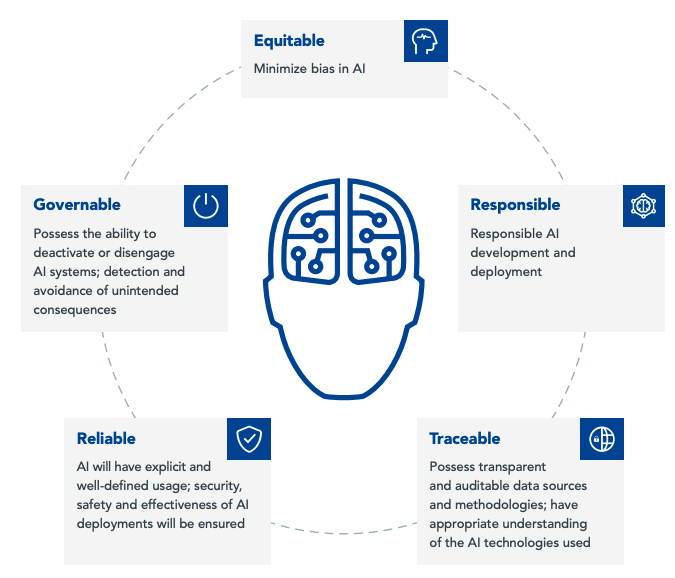

Figure 1 shows a comprehensive approach proposed by NXP to develop a trustworthy and ethical AI system.

To illustrate the requirements of xAI, let us consider two possible applications.

Control of autonomous vehicles (automotive): Imagine getting into a taxi with a human driver. If the driver is going too slowly, you may ask why. The driver could then tell you, for example, that many roads are icy due to the cold and that extreme care is needed to prevent the car from skidding. In an autonomous taxi, however, there is no driver to ask. The car may decide to slow down because a set of machine learning systems (environmental, traction, etc.) have determined that slowing down is prudent, but another part of the autonomous vehicle system should explain the reasons audibly and visually to reassure the passenger.

Patient condition diagnosis (healthcare): Imagine an automated system that speeds up the identification of different types of skin disorders. The application receives a photo of the patient's skin abnormality and sends the result to a dermatologist for treatment. There are numerous types of skin disorders: some are temporary and some are permanent, and some can be painful. The severity can also vary, from something insignificant to a deadly disease. Given this variety, the dermatologist may decide that more analysis is necessary before prescribing a treatment. If the AI application could show the probability of its diagnosis and other high-level inferred results, the specialist could make a better-informed decision.
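A minimal sketch of what "showing the probability of its diagnosis" could look like in practice: instead of a single label, the application reports the most likely classes with their probabilities and flags low-confidence cases for further analysis. The class names, probabilities and the `review_threshold` value are invented for illustration and have no clinical meaning.

```python
def top_k_report(probabilities, k=3, review_threshold=0.8):
    """Return the k most likely classes and whether a specialist should look closer."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    top = ranked[:k]
    # If even the best candidate is below the threshold, ask for more analysis.
    needs_review = top[0][1] < review_threshold
    return top, needs_review

# Hypothetical classifier output for one skin image (assumed values).
output = {"eczema": 0.55, "psoriasis": 0.30, "melanoma": 0.10, "other": 0.05}

top, needs_review = top_k_report(output)
for name, p in top:
    print(f"{name}: {p:.0%}")
print("refer for further analysis:", needs_review)
```

Presenting the runner-up classes alongside the top result lets the specialist see how close the alternatives were rather than trusting a bare label.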

These two examples help us understand why xAI is so important. There are many other ethical and societal dilemmas to consider when it comes to the use of AI and ML in financial services or governance organizations.

When designing machine learning solutions, here are some ideas that developers of embedded systems should keep in mind.

- Does the training data provide a sufficiently broad and diverse representation of the item to be inferred?

- Has it been verified that the test data have an equitable representation of all the classification groups and that the number of these is sufficient?

- Is it necessary to explain the inferred result of the algorithm?

- Can the neural network give an answer based on the probability of the outcomes it has excluded?

- Are there any legal or regulatory limitations on the data the ML application processes?

- Has the ML application been protected from adversaries that could compromise it?

- Can it be said that the ML application is reliable?
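Some of these questions can be checked mechanically. As one example, the sketch below tests whether every classification group is adequately represented in a labelled data set. The label names, counts and the `min_share` threshold are assumptions chosen for illustration.

```python
from collections import Counter

def class_balance(labels, min_share=0.1):
    """Return each class's share of the data and the classes that fall below min_share."""
    counts = Counter(labels)
    total = len(labels)
    shares = {name: count / total for name, count in counts.items()}
    under = sorted(name for name, share in shares.items() if share < min_share)
    return shares, under

# Hypothetical test-set labels for a motor condition monitor.
test_labels = ["healthy"] * 90 + ["worn_bearing"] * 8 + ["drive_fault"] * 2

shares, under = class_balance(test_labels)
print(under)  # classes that need more samples before the results can be trusted
```

A check like this does not prove the data is unbiased, but it catches the common case where a fault class is too rare for the test results to be meaningful.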

Developing machine learning applications

Currently, a large number of embedded developers are working on projects with machine learning capabilities, like the TinyML application described earlier. However, ML is not limited to edge platforms. The concepts can easily be extended to large industrial facilities. Some examples of these functions are computer vision, condition monitoring and security.

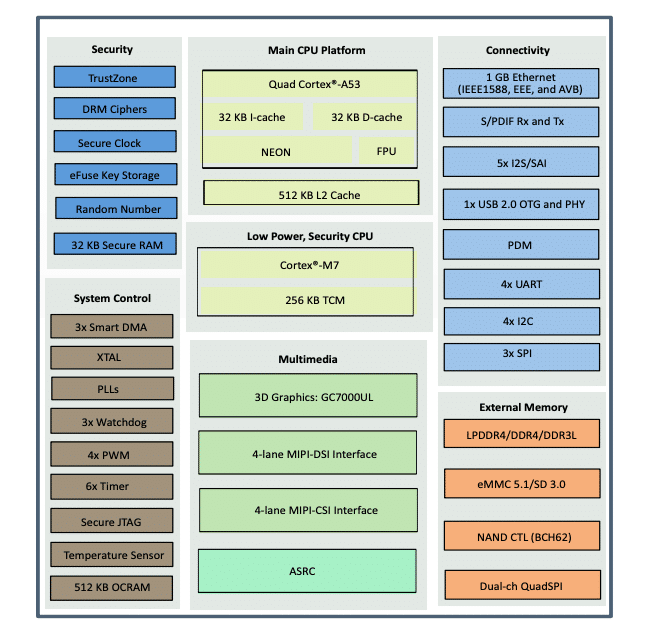

Major semiconductor vendors now offer microcontrollers and microprocessors optimized for machine learning applications. One example is the NXP i.MX 8M Nano UltraLite application processor. The Nano UltraLite (NanoUL) is part of the NXP i.MX 8M Plus series and features a primary quad-core Arm® Cortex®-A53, running at up to 1.5 GHz, and a general-purpose Cortex-M7 core running at 750 MHz for low-power, real-time tasks.

Image 2 shows the most important blocks of the i.MX 8M NanoUL, which include a complete set of connectivity options, peripheral interfaces, security functions, clocks, timers, watchdogs and PWM blocks. The compact NanoUL application processor measures 11 x 11 mm.

To assist application developers working with the i.MX 8M NanoUL, NXP offers the i.MX 8M Nano UltraLite Evaluation Kit (see image 3). This kit consists of a base board and a NanoUL processor board, and is a complete platform on which machine learning applications can be developed.

There is already an established ecosystem of machine learning resources, frameworks, and platforms for prototyping ML designs, both with low-power edge microcontrollers and high-performance microprocessors.

TensorFlow Lite is a variant of TensorFlow, Google's open-source, enterprise-grade ML framework, and is specifically designed for low-power, resource-constrained microcontrollers. It can run on Arm Cortex-M series cores and takes up only 18 KB of memory. TensorFlow Lite offers all the resources needed to deploy models to embedded devices.

Edge Impulse takes a more comprehensive approach: an end-to-end solution that covers ingesting training data, selecting the best neural network model for the application, testing, and final deployment on an edge device. Edge Impulse is powered by TensorFlow and Keras, two open-source ML frameworks.

Advances in explainable AI

Learning to design and develop embedded ML applications is a great opportunity for embedded systems engineers to improve their knowledge. When thinking about the specifications and operation of the final application, it is also worth considering the application of explainable AI principles in the design. Explainable AI is changing the way we think about machine learning. Embedded system developers can make important contributions by building more context, trust, and reliability into an application.